Merge branch 'master' of https://gitlab.aicrowd.com/flatland/neurips2020-flatland-starter-kit

# Conflicts:
#	apt.txt

Showing 5 changed files with 26 additions and 85 deletions:

- src/extra.py: 26 additions, 85 deletions
- src/images/adrian_egli_decisions.png: 0 additions, 0 deletions
- src/images/adrian_egli_info.png: 0 additions, 0 deletions
- src/images/adrian_egli_start.png: 0 additions, 0 deletions
- src/images/adrian_egli_target.png: 0 additions, 0 deletions

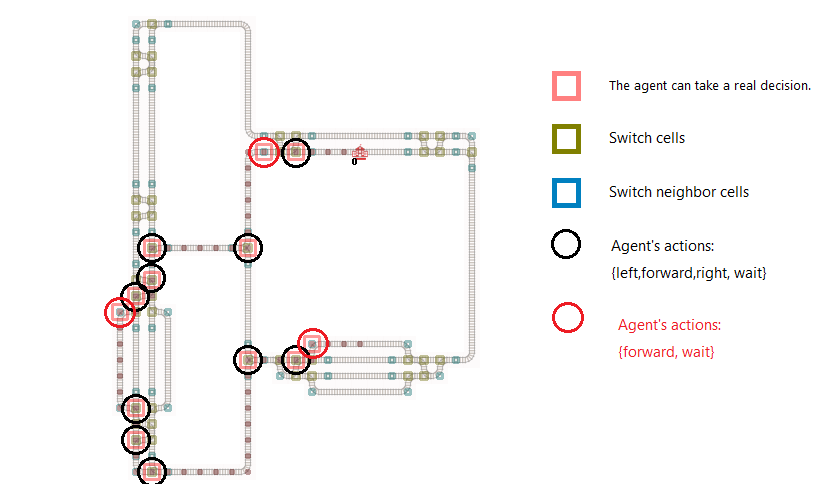

src/images/adrian_egli_decisions.png (new file, mode 0 → 100644, 49.2 KiB)

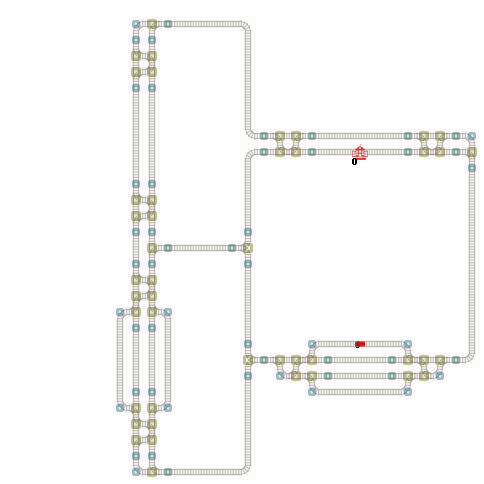

src/images/adrian_egli_info.png (new file, mode 0 → 100644, 47.8 KiB)

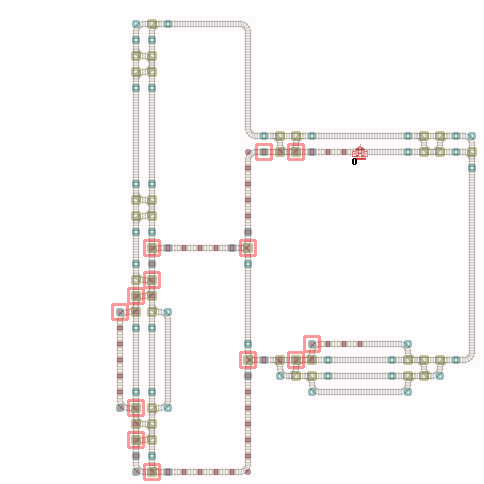

src/images/adrian_egli_start.png (new file, mode 0 → 100644, 27.4 KiB)

src/images/adrian_egli_target.png (new file, mode 0 → 100644, 30.5 KiB)