diff --git a/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/.flake8 b/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/.flake8

deleted file mode 100644

index 3be5b6be4c7dc036e223db12aa880e0cee0ef5ce..0000000000000000000000000000000000000000

--- a/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/.flake8

+++ /dev/null

@@ -1,13 +0,0 @@

-[flake8]

-max-line-length = 119

-exclude = docs/source,*.egg,build

-select = E,W,F

-verbose = 2

-# https://pep8.readthedocs.io/en/latest/intro.html#error-codes

-format = pylint

-ignore =

- E731 # E731 - Do not assign a lambda expression, use a def

- W605 # W605 - invalid escape sequence '\_'. Needed for docs

- W504 # W504 - line break after binary operator

- W503 # W503 - line break before binary operator, need for black

- E203 # E203 - whitespace before ':'. Opposite convention enforced by black

\ No newline at end of file

diff --git a/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/.github/ISSUE_TEMPLATE/bug-report.md b/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/.github/ISSUE_TEMPLATE/bug-report.md

deleted file mode 100644

index 51af8d6f76ffd5519a6f3c09aae1d872546bf1b4..0000000000000000000000000000000000000000

--- a/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/.github/ISSUE_TEMPLATE/bug-report.md

+++ /dev/null

@@ -1,48 +0,0 @@

----

-name: "\U0001F41B Bug Report"

-about: Submit a bug report to help to improve Open-Unmix

-

----

-

-## 🛠Bug

-

-<!-- A clear and concise description of what the bug is. -->

-

-## To Reproduce

-

-Steps to reproduce the behavior:

-

-1.

-1.

-1.

-

-<!-- If you have a code sample, error messages, stack traces, please provide it here as well -->

-

-## Expected behavior

-

-<!-- A clear and concise description of what you expected to happen. -->

-

-## Environment

-

-Please add some information about your environment

-

- - PyTorch Version (e.g., 1.2):

- - OS (e.g., Linux):

- - torchaudio loader (y/n):

- - Python version:

- - CUDA/cuDNN version:

- - Any other relevant information:

-

-If unsure you can paste the output from the [pytorch environment collection script](https://raw.githubusercontent.com/pytorch/pytorch/master/torch/utils/collect_env.py)

-(or fill out the checklist below manually).

-

-You can get that script and run it with:

-```

-wget https://raw.githubusercontent.com/pytorch/pytorch/master/torch/utils/collect_env.py

-# For security purposes, please check the contents of collect_env.py before running it.

-python collect_env.py

-```

-

-## Additional context

-

-<!-- Add any other context about the problem here. -->

diff --git a/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/.github/ISSUE_TEMPLATE/improved-model.md b/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/.github/ISSUE_TEMPLATE/improved-model.md

deleted file mode 100644

index e708c6d8f61fb777d0706cff239047b25e62086b..0000000000000000000000000000000000000000

--- a/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/.github/ISSUE_TEMPLATE/improved-model.md

+++ /dev/null

@@ -1,19 +0,0 @@

----

-name: "\U0001F680Improved Model"

-about: Submit a proposal for an improved separation model

-

----

-

-## 🚀 Model Improvement

-<!-- A clear and concise description of the added model improvement

-

-Example: we changed the ReLU activation to XeLU since it was shown in [1] that this reduces overfitting

--->

-

-## Motivation

-

-<!-- Please outline the motivation for the model improvement. Is your proposal related to a problem? -->

-

-## Objective Evaluation

-

-<!-- A table with the median of median BSSEval result on computed on the MUSDB18 test set -->

\ No newline at end of file

diff --git a/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/.github/PULL_REQUEST_TEMPLATE.md b/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/.github/PULL_REQUEST_TEMPLATE.md

deleted file mode 100644

index 3f89d7d90fd0914039f95abd7a2c542e6a7bb669..0000000000000000000000000000000000000000

--- a/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/.github/PULL_REQUEST_TEMPLATE.md

+++ /dev/null

@@ -1,12 +0,0 @@

-<!--

-Thanks for contributing a pull request! Please ensure you have taken a look at

-the contribution guidelines: https://github.com/sigsep/open-unmix-pytorch/blob/master/CONTRIBUTING.md

-->

-

-#### Reference Issue

-<!-- Example: Fixes #123 -->

-

-#### What does this implement/fix? Explain your changes.

-

-

-#### Any other comments?

diff --git a/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/.github/workflows/test_black.yml b/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/.github/workflows/test_black.yml

deleted file mode 100644

index 4fba942163fad8bbc60c50ddc991d7671f04a1c5..0000000000000000000000000000000000000000

--- a/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/.github/workflows/test_black.yml

+++ /dev/null

@@ -1,25 +0,0 @@

-name: Linter

-on: [push, pull_request]

-

-jobs:

- code-black:

- name: CI

- runs-on: ubuntu-latest

- steps:

- - name: Checkout

- uses: actions/checkout@v2

- - name: Set up Python 3.7

- uses: actions/setup-python@v2

- with:

- python-version: 3.7

-

- - name: Install Black and flake8

- run: pip install black==22.3.0 flake8

- - name: Run Black

- run: python -m black --config=pyproject.toml --check openunmix tests scripts

-

- - name: Lint with flake8

- # Exit on important linting errors and warn about others.

- run: |

- python -m flake8 openunmix tests --show-source --statistics --select=F6,F7,F82,F52

- python -m flake8 --config .flake8 --exit-zero openunmix tests --statistics

diff --git a/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/.github/workflows/test_cli.yml b/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/.github/workflows/test_cli.yml

deleted file mode 100644

index 869c4979b48804c3546bc8bc974bb482561e3163..0000000000000000000000000000000000000000

--- a/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/.github/workflows/test_cli.yml

+++ /dev/null

@@ -1,37 +0,0 @@

-name: UMX

-# thanks for @mpariente for copying this workflow

-# see: https://help.github.com/en/actions/reference/events-that-trigger-workflows

-# Trigger the workflow on push or pull request

-on: [push, pull_request]

-

-jobs:

- src-test:

- name: separation test

- runs-on: ubuntu-latest

- strategy:

- matrix:

- python-version: [3.7, 3.8, 3.9]

-

- # Timeout: https://stackoverflow.com/a/59076067/4521646

- timeout-minutes: 10

- steps:

- - uses: actions/checkout@v2

- - name: Set up Python ${{ matrix.python-version }}

- uses: actions/setup-python@v2

- with:

- python-version: ${{ matrix.python-version }}

- - name: Install libnsdfile, ffmpeg and sox

- run: |

- sudo apt update

- sudo apt install libsndfile1-dev libsndfile1 ffmpeg sox

- - name: Install package dependencies

- run: |

- python -m pip install --upgrade --user pip --quiet

- python -m pip install .["stempeg"]

- python --version

- pip --version

- python -m pip list

-

- - name: CLI tests

- run: |

- umx https://samples.ffmpeg.org/A-codecs/wavpcm/test-96.wav --audio-backend stempeg

diff --git a/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/.github/workflows/test_conda.yml b/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/.github/workflows/test_conda.yml

deleted file mode 100644

index f747f6821981a68bbaa31bc8e90b9f0d38cc3b7f..0000000000000000000000000000000000000000

--- a/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/.github/workflows/test_conda.yml

+++ /dev/null

@@ -1,42 +0,0 @@

-name: CI

-# thanks for @mpariente for copying this workflow

-# see: https://help.github.com/en/actions/reference/events-that-trigger-workflows

-# Trigger the workflow on push or pull request

-on: [push, pull_request]

-

-jobs:

- src-test:

- name: conda-tests

- runs-on: ubuntu-latest

-

- # Timeout: https://stackoverflow.com/a/59076067/4521646

- timeout-minutes: 10

- defaults:

- run:

- shell: bash -l {0}

- steps:

- - uses: actions/checkout@v2

- - name: Cache conda

- uses: actions/cache@v2

- with:

- path: ~/conda_pkgs_dir

- key: conda-${{ hashFiles('environment-ci.yml') }}

- - name: Setup Miniconda

- uses: conda-incubator/setup-miniconda@v2

- with:

- activate-environment: umx-cpu

- environment-file: scripts/environment-cpu-linux.yml

- auto-update-conda: true

- auto-activate-base: false

- python-version: 3.7

- - name: Install dependencies

- run: |

- python -m pip install -e .['tests']

- python --version

- pip --version

- python -m pip list

- - name: Conda list

- run: conda list

- - name: Run model test

- run: |

- py.test tests/test_model.py -v

\ No newline at end of file

diff --git a/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/.github/workflows/test_unittests.yml b/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/.github/workflows/test_unittests.yml

deleted file mode 100644

index 3542d57ab37a19237ed138d0b1b823352708bad7..0000000000000000000000000000000000000000

--- a/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/.github/workflows/test_unittests.yml

+++ /dev/null

@@ -1,71 +0,0 @@

-name: CI

-# thanks for @mpariente for copying this workflow

-# see: https://help.github.com/en/actions/reference/events-that-trigger-workflows

-# Trigger the workflow on push or pull request

-on: [push, pull_request]

-

-jobs:

- src-test:

- name: unit-tests

- runs-on: ubuntu-latest

- strategy:

- matrix:

- python-version: [3.7]

- pytorch-version: ["1.9.0"]

-

- # Timeout: https://stackoverflow.com/a/59076067/4521646

- timeout-minutes: 10

- steps:

- - uses: actions/checkout@v2

- - name: Set up Python ${{ matrix.python-version }}

- uses: actions/setup-python@v2

- with:

- python-version: ${{ matrix.python-version }}

- - name: Install libnsdfile, ffmpeg and sox

- run: |

- sudo apt update

- sudo apt install libsndfile1-dev libsndfile1 ffmpeg sox

- - name: Install python dependencies

- env:

- TORCH_INSTALL: ${{ matrix.pytorch-version }}

- run: |

- python -m pip install --upgrade --user pip --quiet

- python -m pip install numpy Cython --upgrade-strategy only-if-needed --quiet

- python -m pip install coverage codecov --upgrade-strategy only-if-needed --quiet

- if [ $TORCH_INSTALL == "1.8.0" ]; then

- INSTALL="torch==1.8.0+cpu torchaudio==0.8.0 -f https://download.pytorch.org/whl/torch_stable.html"

- elif [ $TORCH_INSTALL == "1.9.0" ]; then

- INSTALL="torch==1.9.0+cpu torchaudio==0.9.0 -f https://download.pytorch.org/whl/torch_stable.html"

- else

- INSTALL="--pre torch torchaudio -f https://download.pytorch.org/whl/nightly/cpu/torch_nightly.html"

- fi

- python -m pip install $INSTALL

- python -m pip install -e .['tests']

- python --version

- pip --version

- python -m pip list

- - name: Create dummy dataset

- run: |

- chmod +x tests/create_dummy_datasets.sh

- ./tests/create_dummy_datasets.sh

- shell: bash

-

- - name: Source code tests

- run: |

- coverage run -a -m py.test tests

- # chmod +x ./tests/cli_test.sh

- # ./tests/cli_test.sh

-

- - name: CLI tests

- run: |

- chmod +x ./tests/cli_test.sh

- ./tests/cli_test.sh

-

- - name: Coverage report

- run: |

- coverage report -m

- coverage xml -o coverage.xml

- - name: Codecov upload

- uses: codecov/codecov-action@v1

- with:

- file: ./coverage.xml

\ No newline at end of file

diff --git a/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/.gitignore b/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/.gitignore

deleted file mode 100644

index e5a08ce698b776e2648dc4226293343fba2dbbeb..0000000000000000000000000000000000000000

--- a/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/.gitignore

+++ /dev/null

@@ -1,99 +0,0 @@

-#### joe made this: http://goel.io/joe

-

-data/

-OSU/

-.mypy_cache

-.vscode

-*.json

-*.wav

-*.mp3

-*.pth.tar

-env*/

-

-#####=== Python ===#####

-

-# Byte-compiled / optimized / DLL files

-__pycache__/

-*.py[cod]

-*$py.class

-

-# C extensions

-*.so

-

-# Distribution / packaging

-.Python

-env/

-build/

-develop-eggs/

-dist/

-downloads/

-eggs/

-.eggs/

-lib/

-lib64/

-parts/

-sdist/

-var/

-*.egg-info/

-.installed.cfg

-*.egg

-

-# PyInstaller

-# Usually these files are written by a python script from a template

-# before PyInstaller builds the exe, so as to inject date/other infos into it.

-*.manifest

-*.spec

-

-# Installer logs

-pip-log.txt

-pip-delete-this-directory.txt

-

-# Unit test / coverage reports

-htmlcov/

-.tox/

-.coverage

-.coverage.*

-.cache

-nosetests.xml

-coverage.xml

-*,cover

-

-# Translations

-*.mo

-*.pot

-

-# Django stuff:

-*.log

-

-# Sphinx documentation

-docs/_build/

-

-# PyBuilder

-target/

-

-#####=== OSX ===#####

-.DS_Store

-.AppleDouble

-.LSOverride

-

-# Icon must end with two \r

-Icon

-

-

-# Thumbnails

-._*

-

-# Files that might appear in the root of a volume

-.DocumentRevisions-V100

-.fseventsd

-.Spotlight-V100

-.TemporaryItems

-.Trashes

-.VolumeIcon.icns

-

-# Directories potentially created on remote AFP share

-.AppleDB

-.AppleDesktop

-Network Trash Folder

-Temporary Items

-.apdisk

diff --git a/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/.nojekyll b/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/.nojekyll

deleted file mode 100644

index e69de29bb2d1d6434b8b29ae775ad8c2e48c5391..0000000000000000000000000000000000000000

diff --git a/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/CONTRIBUTING.md b/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/CONTRIBUTING.md

deleted file mode 100644

index f3d96b6c1540b4c7fdb3f3ed019ae721edaffb6d..0000000000000000000000000000000000000000

--- a/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/CONTRIBUTING.md

+++ /dev/null

@@ -1,137 +0,0 @@

-# Contributing

-

-Open-Unmix is designed as scientific software. Therefore, we encourage the community to submit bug-fixes and comments and improve the __computational performance__, __reproducibility__ and the __readability__ of the code where possible. When contributing to this repository, please first discuss the change you wish to make in the issue tracker with the owners of this repository before making a change.

-

-We are not looking for contributions that only focus on improving the __separation performance__. However, if this is case, we, instead, encourage researchers to

-

-1. Use Open-Unmix for their own research, e.g. by modification of the model.

-2. Publish and present the results in a scientific paper / conference and __cite open-unmix__.

-3. Contact us via mail or open a [performance issue]() if you are interested to contribute the new model.

- In this case we will rerun the training on our internal cluster and update the pre-trained weights accordingly.

-

-Please note we have a code of conduct, please follow it in all your interactions with the project.

-

-## Pull Request Process

-

-The preferred way to contribute to open-unmix is to fork the

-[main repository](http://github.com/sigsep/open-unmix-pytorch/) on

-GitHub:

-

-1. Fork the [project repository](http://github.com/sigsep/open-unmix-pytorch):

- click on the 'Fork' button near the top of the page. This creates

- a copy of the code under your account on the GitHub server.

-

-2. Clone this copy to your local disk:

-

-```

-$ git clone git@github.com:YourLogin/open-unmix-pytorch.git

-$ cd open-unmix-pytorch

-```

-

-3. Create a branch to hold your changes:

-

-```

-$ git checkout -b my-feature

-```

-

- and start making changes. Never work in the ``master`` branch!

-

-4. Ensure any install or build artifacts are removed before making the pull request.

-

-5. Update the README.md and/or the appropriate document in the `/docs` folder with details of changes to the interface, this includes new command line arguments, dataset description or command line examples.

-

-6. Work on this copy on your computer using Git to do the version

- control. When you're done editing, do:

-

-```

-$ git add modified_files

-$ git commit

-```

-

- to record your changes in Git, then push them to GitHub with:

-

-```

-$ git push -u origin my-feature

-```

-

-Finally, go to the web page of your fork of the open-unmix repo,

-and click 'Pull request' to send your changes to the maintainers for

-review. This will send an email to the committers.

-

-(If any of the above seems like magic to you, then look up the

-[Git documentation](http://git-scm.com/documentation) on the web.)

-

-## Code of Conduct

-

-### Our Pledge

-

-In the interest of fostering an open and welcoming environment, we as

-contributors and maintainers pledge to making participation in our project and

-our community a harassment-free experience for everyone, regardless of age, body

-size, disability, ethnicity, gender identity and expression, level of experience,

-nationality, personal appearance, race, religion, or sexual identity and

-orientation.

-

-### Our Standards

-

-Examples of behavior that contributes to creating a positive environment

-include:

-

-* Using welcoming and inclusive language

-* Being respectful of differing viewpoints and experiences

-* Gracefully accepting constructive criticism

-* Focusing on what is best for the community

-* Showing empathy towards other community members

-

-Examples of unacceptable behavior by participants include:

-

-* The use of sexualized language or imagery and unwelcome sexual attention or

-advances

-* Trolling, insulting/derogatory comments, and personal or political attacks

-* Public or private harassment

-* Publishing others' private information, such as a physical or electronic

- address, without explicit permission

-* Other conduct which could reasonably be considered inappropriate in a

- professional setting

-

-### Our Responsibilities

-

-Project maintainers are responsible for clarifying the standards of acceptable

-behavior and are expected to take appropriate and fair corrective action in

-response to any instances of unacceptable behavior.

-

-Project maintainers have the right and responsibility to remove, edit, or

-reject comments, commits, code, wiki edits, issues, and other contributions

-that are not aligned to this Code of Conduct, or to ban temporarily or

-permanently any contributor for other behaviors that they deem inappropriate,

-threatening, offensive, or harmful.

-

-### Scope

-

-This Code of Conduct applies both within project spaces and in public spaces

-when an individual is representing the project or its community. Examples of

-representing a project or community include using an official project e-mail

-address, posting via an official social media account, or acting as an appointed

-representative at an online or offline event. Representation of a project may be

-further defined and clarified by project maintainers.

-

-### Enforcement

-

-Instances of abusive, harassing, or otherwise unacceptable behavior may be

-reported by contacting the project team @aliutkus, @faroit. All

-complaints will be reviewed and investigated and will result in a response that

-is deemed necessary and appropriate to the circumstances. The project team is

-obligated to maintain confidentiality with regard to the reporter of an incident.

-Further details of specific enforcement policies may be posted separately.

-

-Project maintainers who do not follow or enforce the Code of Conduct in good

-faith may face temporary or permanent repercussions as determined by other

-members of the project's leadership.

-

-### Attribution

-

-This Code of Conduct is adapted from the [Contributor Covenant][homepage], version 1.4,

-available at [http://contributor-covenant.org/version/1/4][version]

-

-[homepage]: http://contributor-covenant.org

-[version]: http://contributor-covenant.org/version/1/4/

\ No newline at end of file

diff --git a/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/Dockerfile b/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/Dockerfile

deleted file mode 100644

index c09205d59873ae28b8a8862f00a86ce57ab2e27b..0000000000000000000000000000000000000000

--- a/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/Dockerfile

+++ /dev/null

@@ -1,15 +0,0 @@

-FROM pytorch/pytorch:1.7.1-cuda11.0-cudnn8-runtime

-

-RUN apt-get update && apt-get install -y --no-install-recommends \

- libsox-fmt-all \

- sox \

- libsox-dev

-

-WORKDIR /workspace

-

-RUN conda install ffmpeg -c conda-forge

-RUN pip install musdb>=0.4.0

-

-RUN pip install openunmix['stempeg']

-

-ENTRYPOINT ["umx"]

\ No newline at end of file

diff --git a/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/LICENSE b/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/LICENSE

deleted file mode 100644

index 4267b01da7a50980c2ce7393245000e283d3ab7d..0000000000000000000000000000000000000000

--- a/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/LICENSE

+++ /dev/null

@@ -1,21 +0,0 @@

-MIT License

-

-Copyright (c) 2019 Inria (Fabian-Robert Stöter, Antoine Liutkus)

-

-Permission is hereby granted, free of charge, to any person obtaining a copy

-of this software and associated documentation files (the "Software"), to deal

-in the Software without restriction, including without limitation the rights

-to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

-copies of the Software, and to permit persons to whom the Software is

-furnished to do so, subject to the following conditions:

-

-The above copyright notice and this permission notice shall be included in all

-copies or substantial portions of the Software.

-

-THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

-IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

-FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

-AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

-LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

-OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

-SOFTWARE.

diff --git a/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/README.md b/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/README.md

deleted file mode 100644

index ed10a838de651943f6c20e6254c771bf357abc9f..0000000000000000000000000000000000000000

--- a/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/README.md

+++ /dev/null

@@ -1,291 +0,0 @@

-# _Open-Unmix_ for PyTorch

-

-[](https://joss.theoj.org/papers/571753bc54c5d6dd36382c3d801de41d)

-[](https://colab.research.google.com/drive/1mijF0zGWxN-KaxTnd0q6hayAlrID5fEQ)

-[](https://paperswithcode.com/sota/music-source-separation-on-musdb18?p=open-unmix-a-reference-implementation-for)

-

-[](https://github.com/sigsep/open-unmix-pytorch/actions?query=workflow%3ACI+branch%3Amaster+event%3Apush)

-[](https://pypi.python.org/pypi/openunmix)

-[](https://pypi.python.org/pypi/openunmix)

-

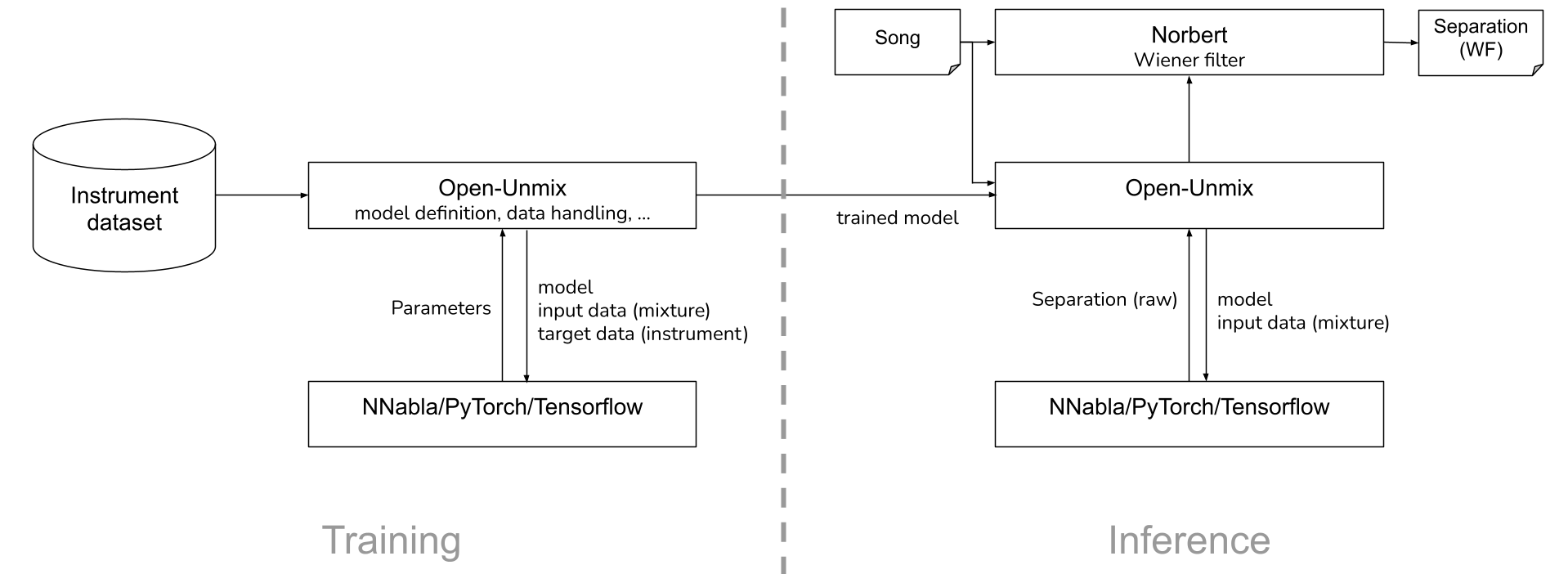

-This repository contains the PyTorch (1.8+) implementation of __Open-Unmix__, a deep neural network reference implementation for music source separation, applicable for researchers, audio engineers and artists. __Open-Unmix__ provides ready-to-use models that allow users to separate pop music into four stems: __vocals__, __drums__, __bass__ and the remaining __other__ instruments. The models were pre-trained on the freely available [MUSDB18](https://sigsep.github.io/datasets/musdb.html) dataset. See details at [apply pre-trained model](#getting-started).

-

-## âï¸ News

-

-- 03/07/2021: We added `umxl`, a model that was trained on extra data which significantly improves the performance, especially generalization.

-- 14/02/2021: We released the new version of open-unmix as a python package. This comes with: a fully differentiable version of [norbert](https://github.com/sigsep/norbert), improved audio loading pipeline and large number of bug fixes. See [release notes](https://github.com/sigsep/open-unmix-pytorch/releases/) for further info.

-

-- 06/05/2020: We added a pre-trained speech enhancement model `umxse` provided by Sony.

-

-- 13/03/2020: Open-unmix was awarded 2nd place in the [PyTorch Global Summer Hackathon 2020](https://devpost.com/software/open-unmix).

-

-__Related Projects:__ open-unmix-pytorch | [open-unmix-nnabla](https://github.com/sigsep/open-unmix-nnabla) | [musdb](https://github.com/sigsep/sigsep-mus-db) | [museval](https://github.com/sigsep/sigsep-mus-eval) | [norbert](https://github.com/sigsep/norbert)

-

-## 🧠The Model (for one source)

-

-

-

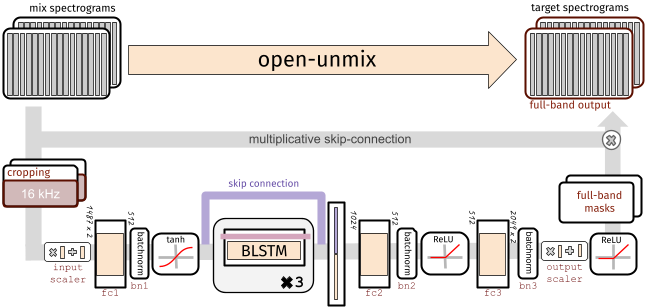

-To perform separation into multiple sources, _Open-unmix_ comprises multiple models that are trained for each particular target. While this makes the training less comfortable, it allows great flexibility to customize the training data for each target source.

-

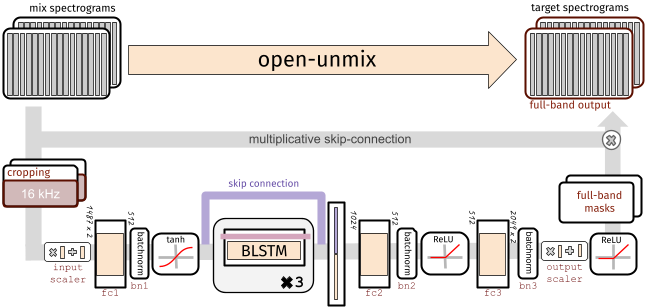

-Each _Open-Unmix_ source model is based on a three-layer bidirectional deep LSTM. The model learns to predict the magnitude spectrogram of a target source, like _vocals_, from the magnitude spectrogram of a mixture input. Internally, the prediction is obtained by applying a mask on the input. The model is optimized in the magnitude domain using mean squared error.

-

-### Input Stage

-

-__Open-Unmix__ operates in the time-frequency domain to perform its prediction. The input of the model is either:

-

-* __`models.Separator`:__ A time domain signal tensor of shape `(nb_samples, nb_channels, nb_timesteps)`, where `nb_samples` are the samples in a batch, `nb_channels` is 1 or 2 for mono or stereo audio, respectively, and `nb_timesteps` is the number of audio samples in the recording. In this case, the model computes STFTs with either `torch` or `asteroid_filteranks` on the fly.

-

-* __`models.OpenUnmix`:__ The core open-unmix takes **magnitude spectrograms** directly (e.g. when pre-computed and loaded from disk). In that case, the input is of shape `(nb_frames, nb_samples, nb_channels, nb_bins)`, where `nb_frames` and `nb_bins` are the time and frequency-dimensions of a Short-Time-Fourier-Transform.

-

-The input spectrogram is _standardized_ using the global mean and standard deviation for every frequency bin across all frames. Furthermore, we apply batch normalization in multiple stages of the model to make the training more robust against gain variation.

-

-### Dimensionality reduction

-

-The LSTM is not operating on the original input spectrogram resolution. Instead, in the first step after the normalization, the network learns to compresses the frequency and channel axis of the model to reduce redundancy and make the model converge faster.

-

-### Bidirectional-LSTM

-

-The core of __open-unmix__ is a three layer bidirectional [LSTM network](https://dl.acm.org/citation.cfm?id=1246450). Due to its recurrent nature, the model can be trained and evaluated on arbitrary length of audio signals. Since the model takes information from past and future simultaneously, the model cannot be used in an online/real-time manner.

-An uni-directional model can easily be trained as described [here](docs/training.md).

-

-### Output Stage

-

-After applying the LSTM, the signal is decoded back to its original input dimensionality. In the last steps the output is multiplied with the input magnitude spectrogram, so that the models is asked to learn a mask.

-

-## 🤹â€â™€ï¸ Putting source models together: the `Separator`

-

-`models.Separator` puts together _Open-unmix_ spectrogram model for each desired target, and combines their output through a multichannel generalized Wiener filter, before application of inverse STFTs using `torchaudio`.

-The filtering is differentiable (but parameter-free) version of [norbert](https://github.com/sigsep/norbert). The separator is currently currently only used during inference.

-

-## ðŸ Getting started

-

-### Installation

-

-`openunmix` can be installed from pypi using:

-

-```

-pip install openunmix

-```

-

-Note, that the pypi version of openunmix uses [torchaudio] to load and save audio files. To increase the number of supported input and output file formats (such as STEMS export), please additionally install [stempeg](https://github.com/faroit/stempeg).

-

-Training is not part of the open-unmix package, please follow [docs/train.md] for more information.

-

-#### Using Docker

-

-We also provide a docker container. Performing separation of a local track in `~/Music/track1.wav` can be performed in a single line:

-

-```

-docker run -v ~/Music/:/data -it faroit/open-unmix-pytorch "/data/track1.wav" --outdir /data/track1

-```

-

-### Pre-trained models

-

-We provide three core pre-trained music separation models. All three models are end-to-end models that take waveform inputs and output the separated waveforms.

-

-* __`umxl` (default)__ trained on private stems dataset of compressed stems. __Note, that the weights are only licensed for non-commercial use (CC BY-NC-SA 4.0).__

-

- [](https://doi.org/10.5281/zenodo.5069601)

-

-* __`umxhq`__ trained on [MUSDB18-HQ](https://sigsep.github.io/datasets/musdb.html#uncompressed-wav) which comprises the same tracks as in MUSDB18 but un-compressed which yield in a full bandwidth of 22050 Hz.

-

- [](https://doi.org/10.5281/zenodo.3370489)

-

-* __`umx`__ is trained on the regular [MUSDB18](https://sigsep.github.io/datasets/musdb.html#compressed-stems) which is bandwidth limited to 16 kHz do to AAC compression. This model should be used for comparison with other (older) methods for evaluation in [SiSEC18](sisec18.unmix.app).

-

- [](https://doi.org/10.5281/zenodo.3370486)

-

-Furthermore, we provide a model for speech enhancement trained by [Sony Corporation](link)

-

-* __`umxse`__ speech enhancement model is trained on the 28-speaker version of the [Voicebank+DEMAND corpus](https://datashare.is.ed.ac.uk/handle/10283/1942?show=full).

-

- [](https://doi.org/10.5281/zenodo.3786908)

-

-All four models are also available as spectrogram (core) models, which take magnitude spectrogram inputs and ouput separated spectrograms.

-These models can be loaded using `umxl_spec`, `umxhq_spec`, `umx_spec` and `umxse_spec`.

-

-To separate audio files (`wav`, `flac`, `ogg` - but not `mp3`) files just run:

-

-```bash

-umx input_file.wav

-```

-

-A more detailed list of the parameters used for the separation is given in the [inference.md](/docs/inference.md) document.

-

-We provide a [jupyter notebook on google colab](https://colab.research.google.com/drive/1mijF0zGWxN-KaxTnd0q6hayAlrID5fEQ) to experiment with open-unmix and to separate files online without any installation setup.

-

-### Using pre-trained models from within python

-

-We implementes several ways to load pre-trained models and use them from within your python projects:

-#### When the package is installed

-

-Loading a pre-trained models is as simple as loading

-

-```python

-separator = openunmix.umxl(...)

-```

-#### torch.hub

-

-We also provide a torch.hub compatible modules that can be loaded. Note that this does _not_ even require to install the open-unmix packagen and should generally work when the pytorch version is the same.

-

-```python

-separator = torch.hub.load('sigsep/open-unmix-pytorch', 'umxl, device=device)

-```

-

-Where, `umxl` specifies the pre-trained model.

-#### Performing separation

-

-With a created separator object, one can perform separation of some `audio` (torch.Tensor of shape `(channels, length)`, provided as at a sampling rate `separator.sample_rate`) through:

-

-```python

-estimates = separator(audio, ...)

-# returns estimates as tensor

-```

-

-Note that this requires the audio to be in the right shape and sampling rate. For convenience we provide a pre-processing in `openunmix.utils.preprocess(..`)` that takes numpy audio and converts it to be used for open-unmix.

-

-#### One-liner

-

-To perform model loading, preprocessing and separation in one step, just use:

-

-```python

-from openunmix import separate

-estimates = separate.predict(audio, ...)

-```

-

-### Load user-trained models

-

-When a path instead of a model-name is provided to `--model`, pre-trained `Separator` will be loaded from disk.

-E.g. The following files are assumed to present when loading `--model mymodel --targets vocals`

-

-* `mymodel/separator.json`

-* `mymodel/vocals.pth`

-* `mymodel/vocals.json`

-

-

-Note that the separator usually joins multiple models for each target and performs separation using all models. E.g. if the separator contains `vocals` and `drums` models, two output files are generated, unless the `--residual` option is selected, in which case an additional source will be produced, containing an estimate of all that is not the targets in the mixtures.

-

-### Evaluation using `museval`

-

-To perform evaluation in comparison to other SISEC systems, you would need to install the `museval` package using

-

-```

-pip install museval

-```

-

-and then run the evaluation using

-

-`python -m openunmix.evaluate --outdir /path/to/musdb/estimates --evaldir /path/to/museval/results`

-

-### Results compared to SiSEC 2018 (SDR/Vocals)

-

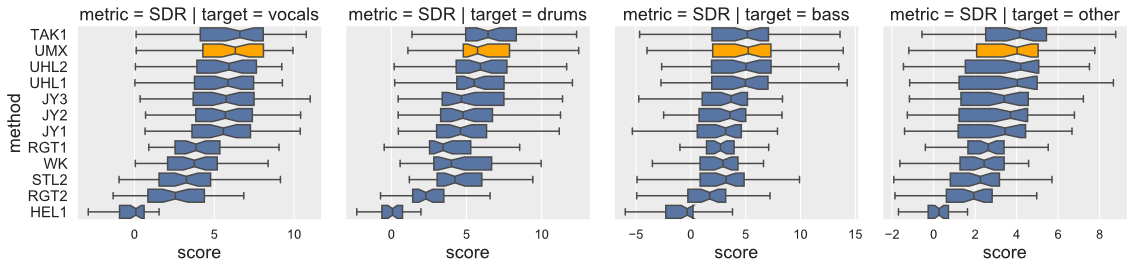

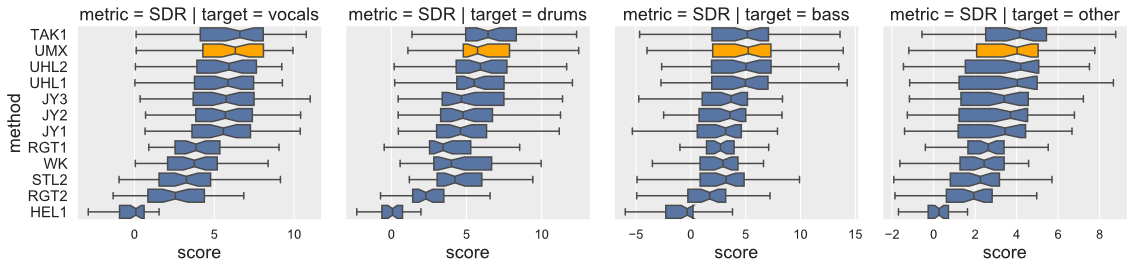

-Open-Unmix yields state-of-the-art results compared to participants from [SiSEC 2018](https://sisec18.unmix.app/#/methods). The performance of `UMXHQ` and `UMX` is almost identical since it was evaluated on compressed STEMS.

-

-

-

-Note that

-

-1. [`STL1`, `TAK2`, `TAK3`, `TAU1`, `UHL3`, `UMXHQ`] were omitted as they were _not_ trained on only _MUSDB18_.

-2. [`HEL1`, `TAK1`, `UHL1`, `UHL2`] are not open-source.

-

-#### Scores (Median of frames, Median of tracks)

-

-|target|SDR | SDR | SDR |

-|------|-----|-----|-----|

-|`model`|UMX |UMXHQ|UMXL |

-|vocals|6.32 | 6.25|__7.21__ |

-|bass |5.23 | 5.07|__6.02__ |

-|drums |5.73 | 6.04|__7.15__ |

-|other |4.02 | 4.28|__4.89__ |

-

-## Training

-

-Details on the training is provided in a separate document [here](docs/training.md).

-

-## Extensions

-

-Details on how _open-unmix_ can be extended or improved for future research on music separation is described in a separate document [here](docs/extensions.md).

-

-

-## Design Choices

-

-we favored simplicity over performance to promote clearness of the code. The rationale is to have __open-unmix__ serve as a __baseline__ for future research while performance still meets current state-of-the-art (See [Evaluation](#Evaluation)). The results are comparable/better to those of `UHL1`/`UHL2` which obtained the best performance over all systems trained on MUSDB18 in the [SiSEC 2018 Evaluation campaign](https://sisec18.unmix.app).

-We designed the code to allow researchers to reproduce existing results, quickly develop new architectures and add own user data for training and testing. We favored framework specifics implementations instead of having a monolithic repository with common code for all frameworks.

-

-## How to contribute

-

-_open-unmix_ is a community focused project, we therefore encourage the community to submit bug-fixes and requests for technical support through [github issues](https://github.com/sigsep/open-unmix-pytorch/issues/new/choose). For more details of how to contribute, please follow our [`CONTRIBUTING.md`](CONTRIBUTING.md). For help and support, please use the gitter chat or the google groups forums.

-

-### Authors

-

-[Fabian-Robert Stöter](https://www.faroit.com/), [Antoine Liutkus](https://github.com/aliutkus), Inria and LIRMM, Montpellier, France

-

-## References

-

-<details><summary>If you use open-unmix for your research – Cite Open-Unmix</summary>

-

-```latex

-@article{stoter19,

- author={F.-R. St\\"oter and S. Uhlich and A. Liutkus and Y. Mitsufuji},

- title={Open-Unmix - A Reference Implementation for Music Source Separation},

- journal={Journal of Open Source Software},

- year=2019,

- doi = {10.21105/joss.01667},

- url = {https://doi.org/10.21105/joss.01667}

-}

-```

-

-</p>

-</details>

-

-<details><summary>If you use the MUSDB dataset for your research - Cite the MUSDB18 Dataset</summary>

-<p>

-

-```latex

-@misc{MUSDB18,

- author = {Rafii, Zafar and

- Liutkus, Antoine and

- Fabian-Robert St{\"o}ter and

- Mimilakis, Stylianos Ioannis and

- Bittner, Rachel},

- title = {The {MUSDB18} corpus for music separation},

- month = dec,

- year = 2017,

- doi = {10.5281/zenodo.1117372},

- url = {https://doi.org/10.5281/zenodo.1117372}

-}

-```

-

-</p>

-</details>

-

-

-<details><summary>If compare your results with SiSEC 2018 Participants - Cite the SiSEC 2018 LVA/ICA Paper</summary>

-<p>

-

-```latex

-@inproceedings{SiSEC18,

- author="St{\"o}ter, Fabian-Robert and Liutkus, Antoine and Ito, Nobutaka",

- title="The 2018 Signal Separation Evaluation Campaign",

- booktitle="Latent Variable Analysis and Signal Separation:

- 14th International Conference, LVA/ICA 2018, Surrey, UK",

- year="2018",

- pages="293--305"

-}

-```

-

-</p>

-</details>

-

-âš ï¸ Please note that the official acronym for _open-unmix_ is **UMX**.

-

-### License

-

-MIT

-

-### Acknowledgements

-

-<p align="center">

- <img src="https://raw.githubusercontent.com/sigsep/website/master/content/open-unmix/logo_INRIA.svg?sanitize=true" width="200" title="inria">

- <img src="https://raw.githubusercontent.com/sigsep/website/master/content/open-unmix/anr.jpg" width="100" alt="anr">

-</p>

diff --git a/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/codecov.yml b/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/codecov.yml

deleted file mode 100644

index 482585a984003a0c344a5e60ffb88aeb7fc496d6..0000000000000000000000000000000000000000

--- a/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/codecov.yml

+++ /dev/null

@@ -1,2 +0,0 @@

-codecov:

- require_ci_to_pass: no

diff --git a/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/docs/cli.html b/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/docs/cli.html

deleted file mode 100644

index b66e74dadad7c64d987c643795dd94f8d89682f1..0000000000000000000000000000000000000000

--- a/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/docs/cli.html

+++ /dev/null

@@ -1,466 +0,0 @@

-<!doctype html>

-<html lang="en">

-<head>

-<meta charset="utf-8">

-<meta name="viewport" content="width=device-width, initial-scale=1, minimum-scale=1" />

-<meta name="generator" content="pdoc 0.9.2" />

-<title>openunmix.cli API documentation</title>

-<meta name="description" content="" />

-<link rel="preload stylesheet" as="style" href="https://cdnjs.cloudflare.com/ajax/libs/10up-sanitize.css/11.0.1/sanitize.min.css" integrity="sha256-PK9q560IAAa6WVRRh76LtCaI8pjTJ2z11v0miyNNjrs=" crossorigin>

-<link rel="preload stylesheet" as="style" href="https://cdnjs.cloudflare.com/ajax/libs/10up-sanitize.css/11.0.1/typography.min.css" integrity="sha256-7l/o7C8jubJiy74VsKTidCy1yBkRtiUGbVkYBylBqUg=" crossorigin>

-<link rel="stylesheet preload" as="style" href="https://cdnjs.cloudflare.com/ajax/libs/highlight.js/10.1.1/styles/github.min.css" crossorigin>

-<style>:root{--highlight-color:#fe9}.flex{display:flex !important}body{line-height:1.5em}#content{padding:20px}#sidebar{padding:30px;overflow:hidden}#sidebar > *:last-child{margin-bottom:2cm}.http-server-breadcrumbs{font-size:130%;margin:0 0 15px 0}#footer{font-size:.75em;padding:5px 30px;border-top:1px solid #ddd;text-align:right}#footer p{margin:0 0 0 1em;display:inline-block}#footer p:last-child{margin-right:30px}h1,h2,h3,h4,h5{font-weight:300}h1{font-size:2.5em;line-height:1.1em}h2{font-size:1.75em;margin:1em 0 .50em 0}h3{font-size:1.4em;margin:25px 0 10px 0}h4{margin:0;font-size:105%}h1:target,h2:target,h3:target,h4:target,h5:target,h6:target{background:var(--highlight-color);padding:.2em 0}a{color:#058;text-decoration:none;transition:color .3s ease-in-out}a:hover{color:#e82}.title code{font-weight:bold}h2[id^="header-"]{margin-top:2em}.ident{color:#900}pre code{background:#f8f8f8;font-size:.8em;line-height:1.4em}code{background:#f2f2f1;padding:1px 4px;overflow-wrap:break-word}h1 code{background:transparent}pre{background:#f8f8f8;border:0;border-top:1px solid #ccc;border-bottom:1px solid #ccc;margin:1em 0;padding:1ex}#http-server-module-list{display:flex;flex-flow:column}#http-server-module-list div{display:flex}#http-server-module-list dt{min-width:10%}#http-server-module-list p{margin-top:0}.toc ul,#index{list-style-type:none;margin:0;padding:0}#index code{background:transparent}#index h3{border-bottom:1px solid #ddd}#index ul{padding:0}#index h4{margin-top:.6em;font-weight:bold}@media (min-width:200ex){#index .two-column{column-count:2}}@media (min-width:300ex){#index .two-column{column-count:3}}dl{margin-bottom:2em}dl dl:last-child{margin-bottom:4em}dd{margin:0 0 1em 3em}#header-classes + dl > dd{margin-bottom:3em}dd dd{margin-left:2em}dd p{margin:10px 0}.name{background:#eee;font-weight:bold;font-size:.85em;padding:5px 10px;display:inline-block;min-width:40%}.name:hover{background:#e0e0e0}dt:target .name{background:var(--highlight-color)}.name > span:first-child{white-space:nowrap}.name.class > span:nth-child(2){margin-left:.4em}.inherited{color:#999;border-left:5px solid #eee;padding-left:1em}.inheritance em{font-style:normal;font-weight:bold}.desc h2{font-weight:400;font-size:1.25em}.desc h3{font-size:1em}.desc dt code{background:inherit}.source summary,.git-link-div{color:#666;text-align:right;font-weight:400;font-size:.8em;text-transform:uppercase}.source summary > *{white-space:nowrap;cursor:pointer}.git-link{color:inherit;margin-left:1em}.source pre{max-height:500px;overflow:auto;margin:0}.source pre code{font-size:12px;overflow:visible}.hlist{list-style:none}.hlist li{display:inline}.hlist li:after{content:',\2002'}.hlist li:last-child:after{content:none}.hlist .hlist{display:inline;padding-left:1em}img{max-width:100%}td{padding:0 .5em}.admonition{padding:.1em .5em;margin-bottom:1em}.admonition-title{font-weight:bold}.admonition.note,.admonition.info,.admonition.important{background:#aef}.admonition.todo,.admonition.versionadded,.admonition.tip,.admonition.hint{background:#dfd}.admonition.warning,.admonition.versionchanged,.admonition.deprecated{background:#fd4}.admonition.error,.admonition.danger,.admonition.caution{background:lightpink}</style>

-<style media="screen and (min-width: 700px)">@media screen and (min-width:700px){#sidebar{width:30%;height:100vh;overflow:auto;position:sticky;top:0}#content{width:70%;max-width:100ch;padding:3em 4em;border-left:1px solid #ddd}pre code{font-size:1em}.item .name{font-size:1em}main{display:flex;flex-direction:row-reverse;justify-content:flex-end}.toc ul ul,#index ul{padding-left:1.5em}.toc > ul > li{margin-top:.5em}}</style>

-<style media="print">@media print{#sidebar h1{page-break-before:always}.source{display:none}}@media print{*{background:transparent !important;color:#000 !important;box-shadow:none !important;text-shadow:none !important}a[href]:after{content:" (" attr(href) ")";font-size:90%}a[href][title]:after{content:none}abbr[title]:after{content:" (" attr(title) ")"}.ir a:after,a[href^="javascript:"]:after,a[href^="#"]:after{content:""}pre,blockquote{border:1px solid #999;page-break-inside:avoid}thead{display:table-header-group}tr,img{page-break-inside:avoid}img{max-width:100% !important}@page{margin:0.5cm}p,h2,h3{orphans:3;widows:3}h1,h2,h3,h4,h5,h6{page-break-after:avoid}}</style>

-<script async src="https://cdnjs.cloudflare.com/ajax/libs/mathjax/2.7.7/latest.js?config=TeX-AMS_CHTML" integrity="sha256-kZafAc6mZvK3W3v1pHOcUix30OHQN6pU/NO2oFkqZVw=" crossorigin></script>

-<script defer src="https://cdnjs.cloudflare.com/ajax/libs/highlight.js/10.1.1/highlight.min.js" integrity="sha256-Uv3H6lx7dJmRfRvH8TH6kJD1TSK1aFcwgx+mdg3epi8=" crossorigin></script>

-<script>window.addEventListener('DOMContentLoaded', () => hljs.initHighlighting())</script>

-</head>

-<body>

-<main>

-<article id="content">

-<header>

-<h1 class="title">Module <code>openunmix.cli</code></h1>

-</header>

-<section id="section-intro">

-<details class="source">

-<summary>

-<span>Expand source code</span>

-<a href="https://github.com/sigsep/open-unmix-pytorch/blob/b436d5f7d40c2b8ff0b2500e9d953fa47929b261/openunmix/cli.py#L0-L199" class="git-link">Browse git</a>

-</summary>

-<pre><code class="python">from pathlib import Path

-import torch

-import torchaudio

-import json

-import numpy as np

-

-

-from openunmix import utils

-from openunmix import predict

-from openunmix import data

-

-import argparse

-

-

-def separate():

- parser = argparse.ArgumentParser(

- description="UMX Inference",

- add_help=True,

- formatter_class=argparse.RawDescriptionHelpFormatter,

- )

-

- parser.add_argument("input", type=str, nargs="+", help="List of paths to wav/flac files.")

-

- parser.add_argument(

- "--model",

- default="umxhq",

- type=str,

- help="path to mode base directory of pretrained models",

- )

-

- parser.add_argument(

- "--targets",

- nargs="+",

- type=str,

- help="provide targets to be processed. \

- If none, all available targets will be computed",

- )

-

- parser.add_argument(

- "--outdir",

- type=str,

- help="Results path where audio evaluation results are stored",

- )

-

- parser.add_argument(

- "--ext",

- type=str,

- default=".wav",

- help="Output extension which sets the audio format",

- )

-

- parser.add_argument("--start", type=float, default=0.0, help="Audio chunk start in seconds")

-

- parser.add_argument(

- "--duration",

- type=float,

- help="Audio chunk duration in seconds, negative values load full track",

- )

-

- parser.add_argument(

- "--no-cuda", action="store_true", default=False, help="disables CUDA inference"

- )

-

- parser.add_argument(

- "--audio-backend",

- type=str,

- default="sox_io",

- help="Set torchaudio backend "

- "(`sox_io`, `sox`, `soundfile` or `stempeg`), defaults to `sox_io`",

- )

-

- parser.add_argument(

- "--niter",

- type=int,

- default=1,

- help="number of iterations for refining results.",

- )

-

- parser.add_argument(

- "--wiener-win-len",

- type=int,

- default=300,

- help="Number of frames on which to apply filtering independently",

- )

-

- parser.add_argument(

- "--residual",

- type=str,

- default=None,

- help="if provided, build a source with given name"

- "for the mix minus all estimated targets",

- )

-

- parser.add_argument(

- "--aggregate",

- type=str,

- default=None,

- help="if provided, must be a string containing a valid expression for "

- "a dictionary, with keys as output target names, and values "

- "a list of targets that are used to build it. For instance: "

- '\'{"vocals":["vocals"], "accompaniment":["drums",'

- '"bass","other"]}\'',

- )

-

- parser.add_argument(

- "--filterbank",

- type=str,

- default="torch",

- help="filterbank implementation method. "

- "Supported: `['torch', 'asteroid']`. `torch` is ~30% faster"

- "compared to `asteroid` on large FFT sizes such as 4096. However"

- "asteroids stft can be exported to onnx, which makes is practical"

- "for deployment.",

- )

- args = parser.parse_args()

- torchaudio.USE_SOUNDFILE_LEGACY_INTERFACE = False

-

- if args.audio_backend != "stempeg":

- torchaudio.set_audio_backend(args.audio_backend)

-

- use_cuda = not args.no_cuda and torch.cuda.is_available()

- device = torch.device("cuda" if use_cuda else "cpu")

-

- # parsing the output dict

- aggregate_dict = None if args.aggregate is None else json.loads(args.aggregate)

-

- # create separator only once to reduce model loading

- # when using multiple files

- separator = utils.load_separator(

- model_str_or_path=args.model,

- targets=args.targets,

- niter=args.niter,

- residual=args.residual,

- wiener_win_len=args.wiener_win_len,

- device=device,

- pretrained=True,

- filterbank=args.filterbank,

- )

-

- separator.freeze()

- separator.to(device)

-

- if args.audio_backend == "stempeg":

- try:

- import stempeg

- except ImportError:

- raise RuntimeError("Please install pip package `stempeg`")

-

- # loop over the files

- for input_file in args.input:

- if args.audio_backend == "stempeg":

- audio, rate = stempeg.read_stems(

- input_file,

- start=args.start,

- duration=args.duration,

- sample_rate=separator.sample_rate,

- dtype=np.float32,

- )

- audio = torch.tensor(audio)

- else:

- audio, rate = data.load_audio(input_file, start=args.start, dur=args.duration)

- estimates = predict.separate(

- audio=audio,

- rate=rate,

- aggregate_dict=aggregate_dict,

- separator=separator,

- device=device,

- )

- if not args.outdir:

- model_path = Path(args.model)

- if not model_path.exists():

- outdir = Path(Path(input_file).stem + "_" + args.model)

- else:

- outdir = Path(Path(input_file).stem + "_" + model_path.stem)

- else:

- outdir = Path(args.outdir)

- outdir.mkdir(exist_ok=True, parents=True)

-

- # write out estimates

- if args.audio_backend == "stempeg":

- target_path = str(outdir / Path("target").with_suffix(args.ext))

- # convert torch dict to numpy dict

- estimates_numpy = {}

- for target, estimate in estimates.items():

- estimates_numpy[target] = torch.squeeze(estimate).detach().numpy().T

-

- stempeg.write_stems(

- target_path,

- estimates_numpy,

- sample_rate=separator.sample_rate,

- writer=stempeg.FilesWriter(multiprocess=True, output_sample_rate=rate),

- )

- else:

- for target, estimate in estimates.items():

- target_path = str(outdir / Path(target).with_suffix(args.ext))

- torchaudio.save(

- target_path,

- torch.squeeze(estimate).to("cpu"),

- sample_rate=separator.sample_rate,

- )</code></pre>

-</details>

-</section>

-<section>

-</section>

-<section>

-</section>

-<section>

-<h2 class="section-title" id="header-functions">Functions</h2>

-<dl>

-<dt id="openunmix.cli.separate"><code class="name flex">

-<span>def <span class="ident">separate</span></span>(<span>)</span>

-</code></dt>

-<dd>

-<div class="desc"></div>

-<details class="source">

-<summary>

-<span>Expand source code</span>

-<a href="https://github.com/sigsep/open-unmix-pytorch/blob/b436d5f7d40c2b8ff0b2500e9d953fa47929b261/openunmix/cli.py#L15-L200" class="git-link">Browse git</a>

-</summary>

-<pre><code class="python">def separate():

- parser = argparse.ArgumentParser(

- description="UMX Inference",

- add_help=True,

- formatter_class=argparse.RawDescriptionHelpFormatter,

- )

-

- parser.add_argument("input", type=str, nargs="+", help="List of paths to wav/flac files.")

-

- parser.add_argument(

- "--model",

- default="umxhq",

- type=str,

- help="path to mode base directory of pretrained models",

- )

-

- parser.add_argument(

- "--targets",

- nargs="+",

- type=str,

- help="provide targets to be processed. \

- If none, all available targets will be computed",

- )

-

- parser.add_argument(

- "--outdir",

- type=str,

- help="Results path where audio evaluation results are stored",

- )

-

- parser.add_argument(

- "--ext",

- type=str,

- default=".wav",

- help="Output extension which sets the audio format",

- )

-

- parser.add_argument("--start", type=float, default=0.0, help="Audio chunk start in seconds")

-

- parser.add_argument(

- "--duration",

- type=float,

- help="Audio chunk duration in seconds, negative values load full track",

- )

-

- parser.add_argument(

- "--no-cuda", action="store_true", default=False, help="disables CUDA inference"

- )

-

- parser.add_argument(

- "--audio-backend",

- type=str,

- default="sox_io",

- help="Set torchaudio backend "

- "(`sox_io`, `sox`, `soundfile` or `stempeg`), defaults to `sox_io`",

- )

-

- parser.add_argument(

- "--niter",

- type=int,

- default=1,

- help="number of iterations for refining results.",

- )

-

- parser.add_argument(

- "--wiener-win-len",

- type=int,

- default=300,

- help="Number of frames on which to apply filtering independently",

- )

-

- parser.add_argument(

- "--residual",

- type=str,

- default=None,

- help="if provided, build a source with given name"

- "for the mix minus all estimated targets",

- )

-

- parser.add_argument(

- "--aggregate",

- type=str,

- default=None,

- help="if provided, must be a string containing a valid expression for "

- "a dictionary, with keys as output target names, and values "

- "a list of targets that are used to build it. For instance: "

- '\'{"vocals":["vocals"], "accompaniment":["drums",'

- '"bass","other"]}\'',

- )

-

- parser.add_argument(

- "--filterbank",

- type=str,

- default="torch",

- help="filterbank implementation method. "

- "Supported: `['torch', 'asteroid']`. `torch` is ~30% faster"

- "compared to `asteroid` on large FFT sizes such as 4096. However"

- "asteroids stft can be exported to onnx, which makes is practical"

- "for deployment.",

- )

- args = parser.parse_args()

- torchaudio.USE_SOUNDFILE_LEGACY_INTERFACE = False

-

- if args.audio_backend != "stempeg":

- torchaudio.set_audio_backend(args.audio_backend)

-

- use_cuda = not args.no_cuda and torch.cuda.is_available()

- device = torch.device("cuda" if use_cuda else "cpu")

-

- # parsing the output dict

- aggregate_dict = None if args.aggregate is None else json.loads(args.aggregate)

-

- # create separator only once to reduce model loading

- # when using multiple files

- separator = utils.load_separator(

- model_str_or_path=args.model,

- targets=args.targets,

- niter=args.niter,

- residual=args.residual,

- wiener_win_len=args.wiener_win_len,

- device=device,

- pretrained=True,

- filterbank=args.filterbank,

- )

-

- separator.freeze()

- separator.to(device)

-

- if args.audio_backend == "stempeg":

- try:

- import stempeg

- except ImportError:

- raise RuntimeError("Please install pip package `stempeg`")

-

- # loop over the files

- for input_file in args.input:

- if args.audio_backend == "stempeg":

- audio, rate = stempeg.read_stems(

- input_file,

- start=args.start,

- duration=args.duration,

- sample_rate=separator.sample_rate,

- dtype=np.float32,

- )

- audio = torch.tensor(audio)

- else:

- audio, rate = data.load_audio(input_file, start=args.start, dur=args.duration)

- estimates = predict.separate(

- audio=audio,

- rate=rate,

- aggregate_dict=aggregate_dict,

- separator=separator,

- device=device,

- )

- if not args.outdir:

- model_path = Path(args.model)

- if not model_path.exists():

- outdir = Path(Path(input_file).stem + "_" + args.model)

- else:

- outdir = Path(Path(input_file).stem + "_" + model_path.stem)

- else:

- outdir = Path(args.outdir)

- outdir.mkdir(exist_ok=True, parents=True)

-

- # write out estimates

- if args.audio_backend == "stempeg":

- target_path = str(outdir / Path("target").with_suffix(args.ext))

- # convert torch dict to numpy dict

- estimates_numpy = {}

- for target, estimate in estimates.items():

- estimates_numpy[target] = torch.squeeze(estimate).detach().numpy().T

-

- stempeg.write_stems(

- target_path,

- estimates_numpy,

- sample_rate=separator.sample_rate,

- writer=stempeg.FilesWriter(multiprocess=True, output_sample_rate=rate),

- )

- else:

- for target, estimate in estimates.items():

- target_path = str(outdir / Path(target).with_suffix(args.ext))

- torchaudio.save(

- target_path,

- torch.squeeze(estimate).to("cpu"),

- sample_rate=separator.sample_rate,

- )</code></pre>

-</details>

-</dd>

-</dl>

-</section>

-<section>

-</section>

-</article>

-<nav id="sidebar">

-<h1>Index</h1>

-<div class="toc">

-<ul></ul>

-</div>

-<ul id="index">

-<li><h3>Super-module</h3>

-<ul>

-<li><code><a title="openunmix" href="index.html">openunmix</a></code></li>

-</ul>

-</li>

-<li><h3><a href="#header-functions">Functions</a></h3>

-<ul class="">

-<li><code><a title="openunmix.cli.separate" href="#openunmix.cli.separate">separate</a></code></li>

-</ul>

-</li>

-</ul>

-</nav>

-</main>

-<footer id="footer">

-<p>Generated by <a href="https://pdoc3.github.io/pdoc"><cite>pdoc</cite> 0.9.2</a>.</p>

-</footer>

-</body>

-</html>

\ No newline at end of file

diff --git a/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/docs/data.html b/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/docs/data.html

deleted file mode 100644

index a7be05f24455acca523bbc7695ac69749e5a435b..0000000000000000000000000000000000000000

--- a/my_submssion/openunmix-baseline/sigsep_open-unmix-pytorch_master/docs/data.html

+++ /dev/null

@@ -1,2449 +0,0 @@

-<!doctype html>

-<html lang="en">

-<head>

-<meta charset="utf-8">

-<meta name="viewport" content="width=device-width, initial-scale=1, minimum-scale=1" />

-<meta name="generator" content="pdoc 0.9.2" />

-<title>openunmix.data API documentation</title>

-<meta name="description" content="" />

-<link rel="preload stylesheet" as="style" href="https://cdnjs.cloudflare.com/ajax/libs/10up-sanitize.css/11.0.1/sanitize.min.css" integrity="sha256-PK9q560IAAa6WVRRh76LtCaI8pjTJ2z11v0miyNNjrs=" crossorigin>

-<link rel="preload stylesheet" as="style" href="https://cdnjs.cloudflare.com/ajax/libs/10up-sanitize.css/11.0.1/typography.min.css" integrity="sha256-7l/o7C8jubJiy74VsKTidCy1yBkRtiUGbVkYBylBqUg=" crossorigin>

-<link rel="stylesheet preload" as="style" href="https://cdnjs.cloudflare.com/ajax/libs/highlight.js/10.1.1/styles/github.min.css" crossorigin>

-<style>:root{--highlight-color:#fe9}.flex{display:flex !important}body{line-height:1.5em}#content{padding:20px}#sidebar{padding:30px;overflow:hidden}#sidebar > *:last-child{margin-bottom:2cm}.http-server-breadcrumbs{font-size:130%;margin:0 0 15px 0}#footer{font-size:.75em;padding:5px 30px;border-top:1px solid #ddd;text-align:right}#footer p{margin:0 0 0 1em;display:inline-block}#footer p:last-child{margin-right:30px}h1,h2,h3,h4,h5{font-weight:300}h1{font-size:2.5em;line-height:1.1em}h2{font-size:1.75em;margin:1em 0 .50em 0}h3{font-size:1.4em;margin:25px 0 10px 0}h4{margin:0;font-size:105%}h1:target,h2:target,h3:target,h4:target,h5:target,h6:target{background:var(--highlight-color);padding:.2em 0}a{color:#058;text-decoration:none;transition:color .3s ease-in-out}a:hover{color:#e82}.title code{font-weight:bold}h2[id^="header-"]{margin-top:2em}.ident{color:#900}pre code{background:#f8f8f8;font-size:.8em;line-height:1.4em}code{background:#f2f2f1;padding:1px 4px;overflow-wrap:break-word}h1 code{background:transparent}pre{background:#f8f8f8;border:0;border-top:1px solid #ccc;border-bottom:1px solid #ccc;margin:1em 0;padding:1ex}#http-server-module-list{display:flex;flex-flow:column}#http-server-module-list div{display:flex}#http-server-module-list dt{min-width:10%}#http-server-module-list p{margin-top:0}.toc ul,#index{list-style-type:none;margin:0;padding:0}#index code{background:transparent}#index h3{border-bottom:1px solid #ddd}#index ul{padding:0}#index h4{margin-top:.6em;font-weight:bold}@media (min-width:200ex){#index .two-column{column-count:2}}@media (min-width:300ex){#index .two-column{column-count:3}}dl{margin-bottom:2em}dl dl:last-child{margin-bottom:4em}dd{margin:0 0 1em 3em}#header-classes + dl > dd{margin-bottom:3em}dd dd{margin-left:2em}dd p{margin:10px 0}.name{background:#eee;font-weight:bold;font-size:.85em;padding:5px 10px;display:inline-block;min-width:40%}.name:hover{background:#e0e0e0}dt:target .name{background:var(--highlight-color)}.name > span:first-child{white-space:nowrap}.name.class > span:nth-child(2){margin-left:.4em}.inherited{color:#999;border-left:5px solid #eee;padding-left:1em}.inheritance em{font-style:normal;font-weight:bold}.desc h2{font-weight:400;font-size:1.25em}.desc h3{font-size:1em}.desc dt code{background:inherit}.source summary,.git-link-div{color:#666;text-align:right;font-weight:400;font-size:.8em;text-transform:uppercase}.source summary > *{white-space:nowrap;cursor:pointer}.git-link{color:inherit;margin-left:1em}.source pre{max-height:500px;overflow:auto;margin:0}.source pre code{font-size:12px;overflow:visible}.hlist{list-style:none}.hlist li{display:inline}.hlist li:after{content:',\2002'}.hlist li:last-child:after{content:none}.hlist .hlist{display:inline;padding-left:1em}img{max-width:100%}td{padding:0 .5em}.admonition{padding:.1em .5em;margin-bottom:1em}.admonition-title{font-weight:bold}.admonition.note,.admonition.info,.admonition.important{background:#aef}.admonition.todo,.admonition.versionadded,.admonition.tip,.admonition.hint{background:#dfd}.admonition.warning,.admonition.versionchanged,.admonition.deprecated{background:#fd4}.admonition.error,.admonition.danger,.admonition.caution{background:lightpink}</style>

-<style media="screen and (min-width: 700px)">@media screen and (min-width:700px){#sidebar{width:30%;height:100vh;overflow:auto;position:sticky;top:0}#content{width:70%;max-width:100ch;padding:3em 4em;border-left:1px solid #ddd}pre code{font-size:1em}.item .name{font-size:1em}main{display:flex;flex-direction:row-reverse;justify-content:flex-end}.toc ul ul,#index ul{padding-left:1.5em}.toc > ul > li{margin-top:.5em}}</style>

-<style media="print">@media print{#sidebar h1{page-break-before:always}.source{display:none}}@media print{*{background:transparent !important;color:#000 !important;box-shadow:none !important;text-shadow:none !important}a[href]:after{content:" (" attr(href) ")";font-size:90%}a[href][title]:after{content:none}abbr[title]:after{content:" (" attr(title) ")"}.ir a:after,a[href^="javascript:"]:after,a[href^="#"]:after{content:""}pre,blockquote{border:1px solid #999;page-break-inside:avoid}thead{display:table-header-group}tr,img{page-break-inside:avoid}img{max-width:100% !important}@page{margin:0.5cm}p,h2,h3{orphans:3;widows:3}h1,h2,h3,h4,h5,h6{page-break-after:avoid}}</style>

-<script async src="https://cdnjs.cloudflare.com/ajax/libs/mathjax/2.7.7/latest.js?config=TeX-AMS_CHTML" integrity="sha256-kZafAc6mZvK3W3v1pHOcUix30OHQN6pU/NO2oFkqZVw=" crossorigin></script>

-<script defer src="https://cdnjs.cloudflare.com/ajax/libs/highlight.js/10.1.1/highlight.min.js" integrity="sha256-Uv3H6lx7dJmRfRvH8TH6kJD1TSK1aFcwgx+mdg3epi8=" crossorigin></script>

-<script>window.addEventListener('DOMContentLoaded', () => hljs.initHighlighting())</script>

-</head>

-<body>

-<main>

-<article id="content">

-<header>

-<h1 class="title">Module <code>openunmix.data</code></h1>

-</header>

-<section id="section-intro">

-<details class="source">

-<summary>

-<span>Expand source code</span>

-<a href="https://github.com/sigsep/open-unmix-pytorch/blob/b436d5f7d40c2b8ff0b2500e9d953fa47929b261/openunmix/data.py#L0-L974" class="git-link">Browse git</a>

-</summary>

-<pre><code class="python">import argparse

-import random

-from pathlib import Path

-from typing import Optional, Union, Tuple, List, Any, Callable

-

-import torch

-import torch.utils.data

-import torchaudio

-import tqdm

-from torchaudio.datasets.utils import bg_iterator

-

-

-def load_info(path: str) -> dict:

- """Load audio metadata

-

- this is a backend_independent wrapper around torchaudio.info

-

- Args:

- path: Path of filename

- Returns:

- Dict: Metadata with

- `samplerate`, `samples` and `duration` in seconds

-

- """

- # get length of file in samples

- if torchaudio.get_audio_backend() == "sox":

- raise RuntimeError("Deprecated backend is not supported")

-

- info = {}

- si = torchaudio.info(str(path))

- info["samplerate"] = si.sample_rate

- info["samples"] = si.num_frames

- info["channels"] = si.num_channels

- info["duration"] = info["samples"] / info["samplerate"]

- return info

-

-

-def load_audio(

- path: str,

- start: float = 0.0,

- dur: Optional[float] = None,

- info: Optional[dict] = None,

-):

- """Load audio file

-

- Args:

- path: Path of audio file

- start: start position in seconds, defaults on the beginning.

- dur: end position in seconds, defaults to `None` (full file).

- info: metadata object as called from `load_info`.

-

- Returns:

- Tensor: torch tensor waveform of shape `(num_channels, num_samples)`

- """

- # loads the full track duration

- if dur is None:

- # we ignore the case where start!=0 and dur=None

- # since we have to deal with fixed length audio

- sig, rate = torchaudio.load(path)

- return sig, rate

- else:

- if info is None:

- info = load_info(path)

- num_frames = int(dur * info["samplerate"])

- frame_offset = int(start * info["samplerate"])

- sig, rate = torchaudio.load(path, num_frames=num_frames, frame_offset=frame_offset)

- return sig, rate

-

-

-def aug_from_str(list_of_function_names: list):

- if list_of_function_names:

- return Compose([globals()["_augment_" + aug] for aug in list_of_function_names])

- else:

- return lambda audio: audio

-

-

-class Compose(object):

- """Composes several augmentation transforms.

- Args:

- augmentations: list of augmentations to compose.

- """

-

- def __init__(self, transforms):

- self.transforms = transforms

-

- def __call__(self, audio: torch.Tensor) -> torch.Tensor:

- for t in self.transforms:

- audio = t(audio)

- return audio

-

-

-def _augment_gain(audio: torch.Tensor, low: float = 0.25, high: float = 1.25) -> torch.Tensor:

- """Applies a random gain between `low` and `high`"""

- g = low + torch.rand(1) * (high - low)

- return audio * g

-

-

-def _augment_channelswap(audio: torch.Tensor) -> torch.Tensor:

- """Swap channels of stereo signals with a probability of p=0.5"""

- if audio.shape[0] == 2 and torch.tensor(1.0).uniform_() < 0.5:

- return torch.flip(audio, [0])

- else:

- return audio

-

-

-def _augment_force_stereo(audio: torch.Tensor) -> torch.Tensor:

- # for multichannel > 2, we drop the other channels

- if audio.shape[0] > 2:

- audio = audio[:2, ...]

-

- if audio.shape[0] == 1:

- # if we have mono, we duplicate it to get stereo

- audio = torch.repeat_interleave(audio, 2, dim=0)

-

- return audio

-

-

-class UnmixDataset(torch.utils.data.Dataset):

- _repr_indent = 4

-

- def __init__(

- self,

- root: Union[Path, str],

- sample_rate: float,

- seq_duration: Optional[float] = None,

- source_augmentations: Optional[Callable] = None,

- ) -> None:

- self.root = Path(args.root).expanduser()

- self.sample_rate = sample_rate

- self.seq_duration = seq_duration

- self.source_augmentations = source_augmentations

-

- def __getitem__(self, index: int) -> Any:

- raise NotImplementedError

-

- def __len__(self) -> int:

- raise NotImplementedError

-

- def __repr__(self) -> str:

- head = "Dataset " + self.__class__.__name__

- body = ["Number of datapoints: {}".format(self.__len__())]

- body += self.extra_repr().splitlines()

- lines = [head] + [" " * self._repr_indent + line for line in body]

- return "\n".join(lines)

-

- def extra_repr(self) -> str:

- return ""

-

-

-def load_datasets(

- parser: argparse.ArgumentParser, args: argparse.Namespace

-) -> Tuple[UnmixDataset, UnmixDataset, argparse.Namespace]:

- """Loads the specified dataset from commandline arguments

-

- Returns:

- train_dataset, validation_dataset

- """

- if args.dataset == "aligned":

- parser.add_argument("--input-file", type=str)

- parser.add_argument("--output-file", type=str)

-

- args = parser.parse_args()

- # set output target to basename of output file

- args.target = Path(args.output_file).stem

-

- dataset_kwargs = {

- "root": Path(args.root),

- "seq_duration": args.seq_dur,

- "input_file": args.input_file,

- "output_file": args.output_file,

- }

- args.target = Path(args.output_file).stem

- train_dataset = AlignedDataset(

- split="train", random_chunks=True, **dataset_kwargs

- ) # type: UnmixDataset

- valid_dataset = AlignedDataset(split="valid", **dataset_kwargs) # type: UnmixDataset

-

- elif args.dataset == "sourcefolder":

- parser.add_argument("--interferer-dirs", type=str, nargs="+")

- parser.add_argument("--target-dir", type=str)

- parser.add_argument("--ext", type=str, default=".wav")

- parser.add_argument("--nb-train-samples", type=int, default=1000)

- parser.add_argument("--nb-valid-samples", type=int, default=100)

- parser.add_argument("--source-augmentations", type=str, nargs="+")

- args = parser.parse_args()

- args.target = args.target_dir

-

- dataset_kwargs = {

- "root": Path(args.root),

- "interferer_dirs": args.interferer_dirs,

- "target_dir": args.target_dir,

- "ext": args.ext,

- }

-

- source_augmentations = aug_from_str(args.source_augmentations)

-

- train_dataset = SourceFolderDataset(

- split="train",

- source_augmentations=source_augmentations,

- random_chunks=True,

- nb_samples=args.nb_train_samples,

- seq_duration=args.seq_dur,

- **dataset_kwargs,

- )

-

- valid_dataset = SourceFolderDataset(

- split="valid",

- random_chunks=True,

- seq_duration=args.seq_dur,

- nb_samples=args.nb_valid_samples,

- **dataset_kwargs,

- )

-

- elif args.dataset == "trackfolder_fix":

- parser.add_argument("--target-file", type=str)

- parser.add_argument("--interferer-files", type=str, nargs="+")

- parser.add_argument(

- "--random-track-mix",

- action="store_true",

- default=False,

- help="Apply random track mixing augmentation",

- )

- parser.add_argument("--source-augmentations", type=str, nargs="+")

-

- args = parser.parse_args()

- args.target = Path(args.target_file).stem

-

- dataset_kwargs = {

- "root": Path(args.root),

- "interferer_files": args.interferer_files,

- "target_file": args.target_file,

- }

-

- source_augmentations = aug_from_str(args.source_augmentations)

-

- train_dataset = FixedSourcesTrackFolderDataset(

- split="train",

- source_augmentations=source_augmentations,

- random_track_mix=args.random_track_mix,

- random_chunks=True,

- seq_duration=args.seq_dur,

- **dataset_kwargs,

- )

- valid_dataset = FixedSourcesTrackFolderDataset(

- split="valid", seq_duration=None, **dataset_kwargs

- )

-

- elif args.dataset == "trackfolder_var":

- parser.add_argument("--ext", type=str, default=".wav")

- parser.add_argument("--target-file", type=str)

- parser.add_argument("--source-augmentations", type=str, nargs="+")

- parser.add_argument(

- "--random-interferer-mix",

- action="store_true",

- default=False,

- help="Apply random interferer mixing augmentation",

- )

- parser.add_argument(

- "--silence-missing",

- action="store_true",

- default=False,

- help="silence missing targets",

- )

-

- args = parser.parse_args()

- args.target = Path(args.target_file).stem

-

- dataset_kwargs = {

- "root": Path(args.root),