
- runs_bench/Jan18_16-14-19_K57261_DeadLockAvoidance_full/events.out.tfevents.1610982862.K57261.14612.0: 0 additions, 0 deletions

- runs_bench/Jan18_16-43-41_K57261_DeadLockAvoidanceWithDecision_full/events.out.tfevents.1610984623.K57261.17628.0: 0 additions, 0 deletions

- runs_bench/Jan18_16-45-04_K57261_MultiDecision_full/events.out.tfevents.1610984709.K57261.1796.0: 0 additions, 0 deletions

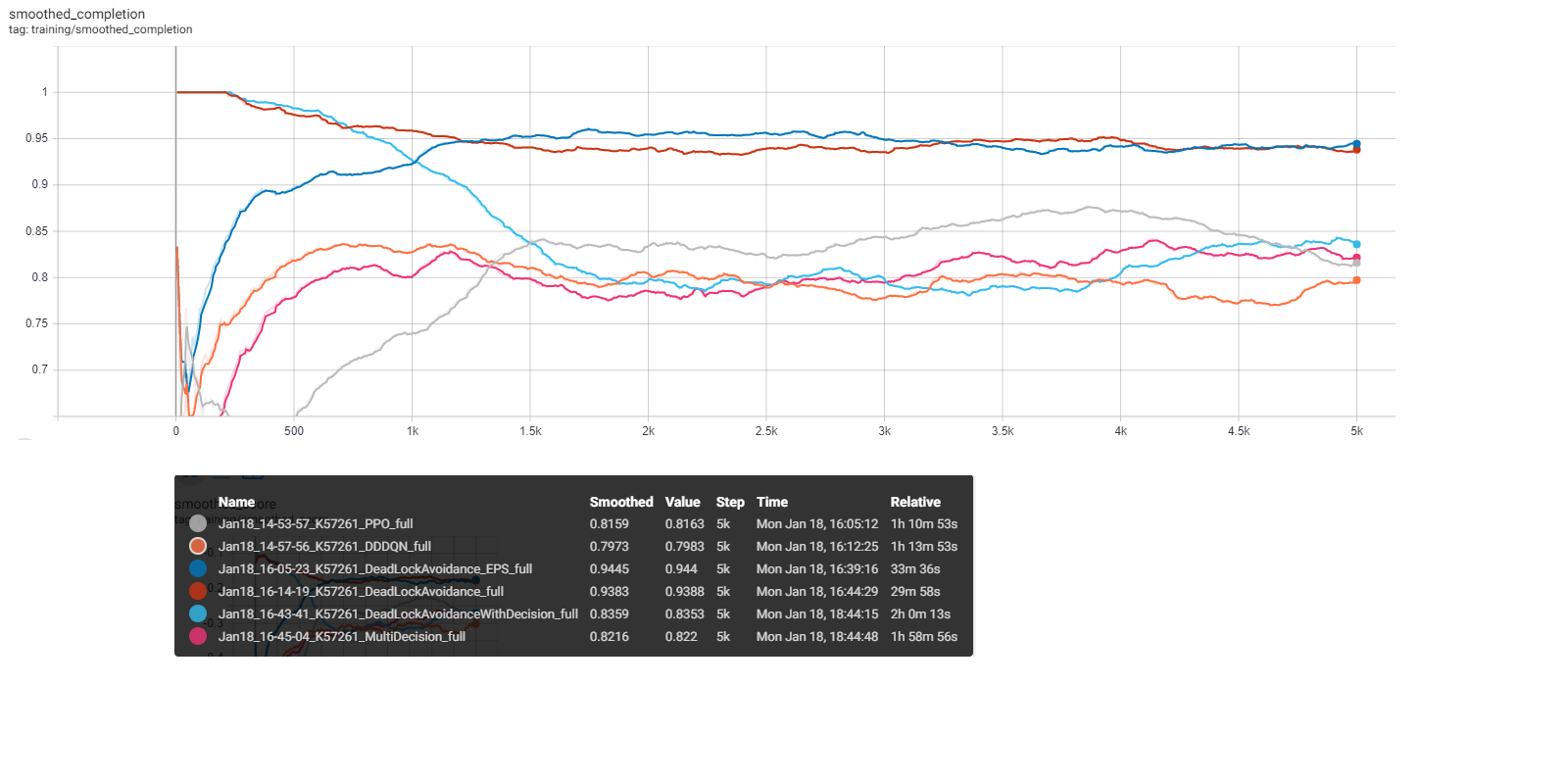

- runs_bench/Screenshots/full.png: 0 additions, 0 deletions

- runs_bench/Screenshots/reduced.png: 0 additions, 0 deletions

- utils/agent_action_config.py: 73 additions, 0 deletions

- utils/agent_can_choose_helper.py: 107 additions, 0 deletions

- utils/dead_lock_avoidance_agent.py: 34 additions, 15 deletions

- utils/deadlock_check.py: 53 additions, 0 deletions

- utils/extra.py: 0 additions, 363 deletions

- utils/fast_tree_obs.py: 86 additions, 144 deletions

- utils/shortest_Distance_walker.py: 0 additions, 87 deletions

- utils/shortest_path_walker_heuristic_agent.py: 57 additions, 0 deletions


runs_bench/Screenshots/full.png: new file (mode 0 → 100644), 139 KiB

runs_bench/Screenshots/reduced.png: new file (mode 0 → 100644), 178 KiB

utils/agent_action_config.py: new file (mode 0 → 100644)

utils/agent_can_choose_helper.py: new file (mode 0 → 100644)

utils/extra.py: deleted (mode 100644 → 0)

utils/shortest_Distance_walker.py: deleted (mode 100644 → 0)